Sameer Ambekar

Deep learning | Computer vision

I am pursuing my Ph.D. (Doctoral Researcher) in deep learning at the Technical University of Munich (TU Munich) and Helmholtz Munich under Prof. Dr. Julia Schnabel and Dr. Daniel Lang. My academic journey includes a Master's in Artificial Intelligence (MSc AI) at the University of Amsterdam (UvA), where I also worked as a Research Intern at the AIM Lab. For my MSc AI thesis, I addressed test-time adaptation of classifiers for domain generalization, using meta-learning and variational inference for efficient multi-source training and transferable features, under Prof. dr. Cees Snoek, Prof. Xiantong Zhen and Zehao Xiao.

Before the MSc AI, I worked as a Research Assistant (RA) at IIT Delhi, India, under Prof. Prathosh A.P. on domain adaptation through generative latent search in the VAE latent space. Before that, I worked at the Indian Council of Medical Research (ICMR) as a Researcher under Dr. Subarna Roy (Scientist G) and Mr. Pramod Kumar (Scientist C).

I also serve as a Reviewer for top-tier conferences and journals such as NeurIPS, CVPR, ICML, ECCV, ICCV, WACV, IEEE Transactions on Neural Networks and Learning Systems, and Elsevier Applied Soft Computing. In addition, I serve as a Mentor for the Neuromatch deep learning course.

MSc Thesis Opportunity: We are looking for an MSc thesis student to address distribution shifts in medical imaging. Kindly refer to the PDF proposal for more information.

News

The test-time adaptation methods proposed in my MSc AI thesis at the University of Amsterdam have been accepted at WACV 2025 and CoLLAs 2024.

Our work on 'Selective Test-time adaptation' won the Best Paper award at MICCAIw ADSMI.

Attended the ICVSS computer vision summer school in Sicily, Italy.

Attended the EEML 2023 machine learning summer school by Google DeepMind.

Research Goal & Publications

I am interested in Test-time adaptation and Unsupervised learning.

My goal is to use learned representations and patterns to transfer to unseen domains, even when the data is high-dimensional and arrives in streaming batches with distribution shifts.

My efforts have focused on problems in test-time adaptation, domain generalization, and domain adaptation, approached through variational inference, meta-learning, surrogate model updates, and model predictions.

Layer-wise Test-time Adaptation to Dynamic Shifts

Precise Test-time detection

Test-Time adaptation: Non-Parametric, Backprop-free and entirely feedforward

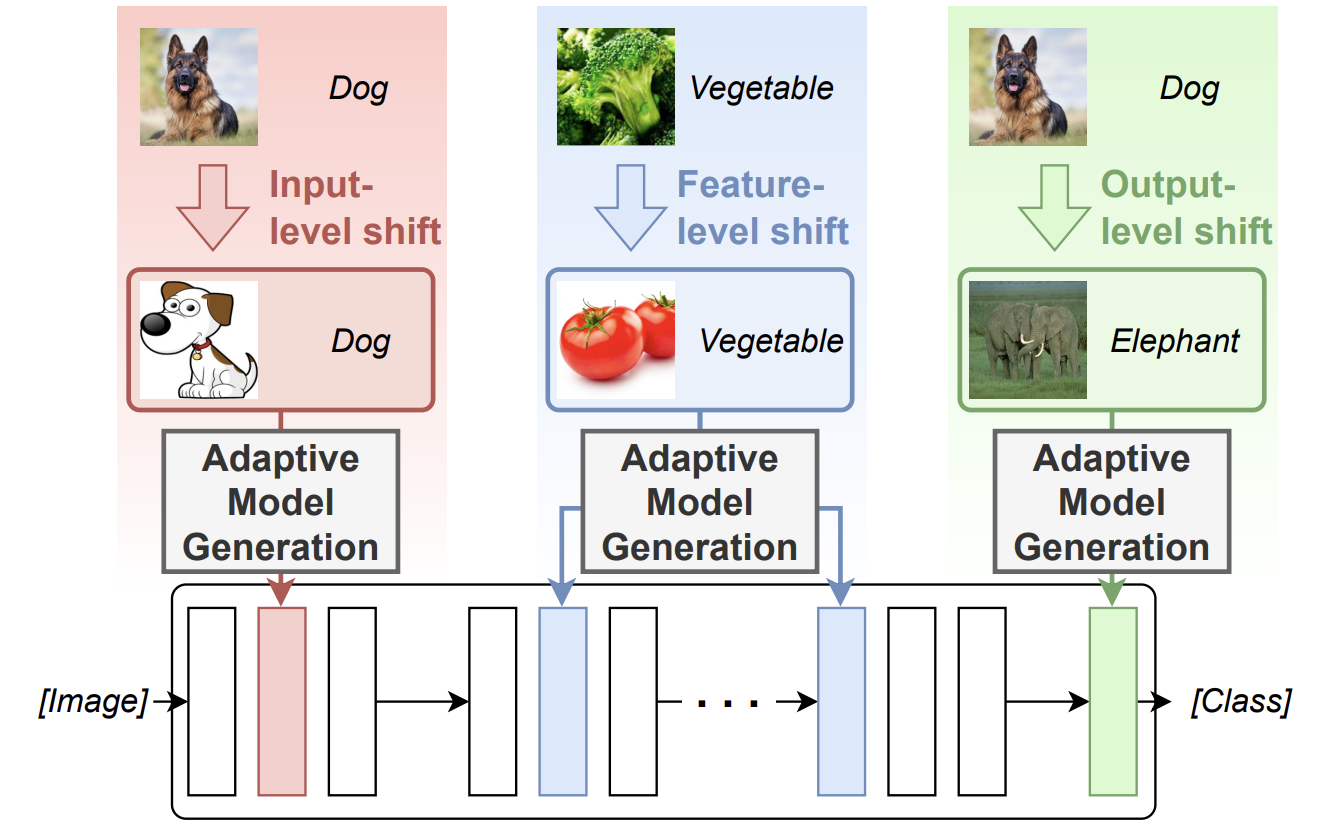

GeneralizeFormer: Layer-Adaptive Model Generation across Test-Time Distribution Shifts

We consider the problem of test-time domain generalization, where a model is trained on several source domains and adjusted on target domains never seen during training. Unlike common methods that fine-tune the model or adjust the classifier parameters online, we propose to generate multiple layer parameters on the fly during inference with a lightweight meta-learned transformer, which we call GeneralizeFormer.

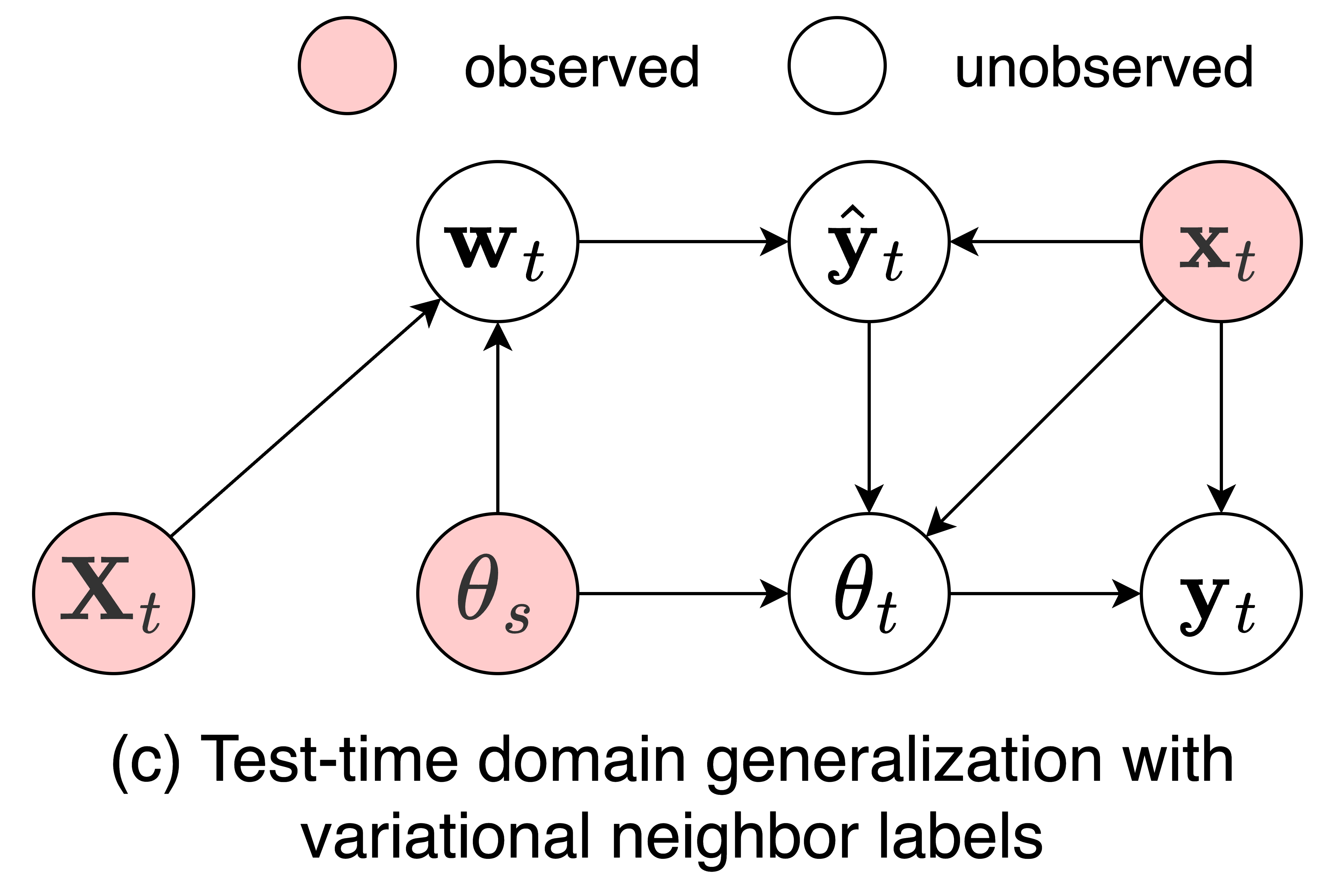

Probabilistic Test-Time Generalization by Variational Neighbor-Labeling

We propose probabilistic pseudo-labeling of target samples to generalize the source-trained model to the target domain at test time. We formulate generalization at test time as a variational inference problem, modeling pseudo labels as distributions to account for uncertainty during generalization and to alleviate the misleading signal of inaccurate pseudo labels.
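As a rough illustration of why a pseudo label treated as a distribution carries more information than a hard label, here is a minimal sketch. It is not the variational method from the paper: the function `neighbor_soft_labels`, the choice of `k`, and the toy features and logits are all assumptions made for this example. Each target sample receives the average predicted class distribution of its nearest neighbors in feature space.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def neighbor_soft_labels(features, logits, k=2):
    """Hypothetical helper: soft pseudo label = mean prediction of the
    k nearest target samples (the sample itself counts as a neighbor)."""
    probs = [softmax(l) for l in logits]
    n_classes = len(probs[0])
    soft = []
    for f in features:
        # Rank all target samples by Euclidean distance to this sample.
        order = sorted(range(len(features)),
                       key=lambda j: math.dist(f, features[j]))
        nearest = order[:k]
        soft.append([sum(probs[j][c] for j in nearest) / k
                     for c in range(n_classes)])
    return soft

# Toy target batch (assumed data): two clusters, two classes.
features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
logits = [[2.0, 0.0], [0.2, 0.0], [0.0, 2.0], [0.0, 0.1]]
soft = neighbor_soft_labels(features, logits, k=2)
```

In this toy batch, the second sample is only weakly confident on its own, and its soft label is pulled toward the prediction of its confident neighbor while remaining a full distribution rather than a one-hot label.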

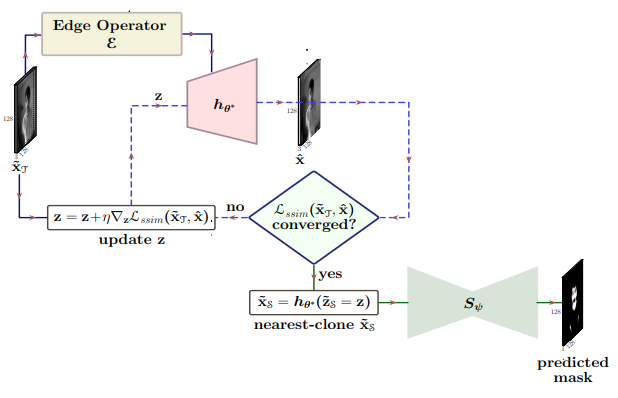

Unsupervised Domain Adaptation for Semantic Segmentation of NIR Images through Generative Latent Search

We cast the skin segmentation problem as one of target-independent Unsupervised Domain Adaptation (UDA), where we use data from the red channel of the visible range to develop a skin segmentation algorithm for NIR images. We propose a method for target-independent segmentation in which the 'nearest-clone' of a target image in the source domain is searched for and used as a proxy in the segmentation network trained only on the source domain.
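The idea of searching for a 'nearest-clone' can be sketched on a toy problem. This is only an illustration under strong assumptions, not the paper's implementation: a fixed linear map (`decode`, with hypothetical weights `W` and `b`) stands in for a decoder trained on the source domain, squared Euclidean distance stands in for the similarity measure, and plain gradient descent over the latent code `z` stands in for the search.

```python
def decode(z, W, b):
    """Toy linear 'decoder' standing in for a source-trained generator."""
    return [sum(W[i][j] * z[j] for j in range(len(z))) + b[i]
            for i in range(len(W))]

def latent_search(x, W, b, steps=500, lr=0.05):
    """Gradient descent on z to minimise ||decode(z) - x||^2.
    Returns the latent code and the decoded 'nearest clone'."""
    z = [0.0] * len(W[0])
    for _ in range(steps):
        residual = [g - t for g, t in zip(decode(z, W, b), x)]
        # Gradient of the squared error with respect to z: 2 * W^T residual.
        grad = [2 * sum(W[i][j] * residual[i] for i in range(len(W)))
                for j in range(len(z))]
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z, decode(z, W, b)

# Assumed toy setup: a 2-D latent space mapped into 3-D "image" space.
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0, 0.0]
target = [1.0, 2.0, 4.0]  # lies off the decoder's output space
z_star, clone = latent_search(target, W, b)
```

The target cannot be reproduced exactly by this decoder, so the search returns the closest point the decoder can produce. In the setting described above, that proxy, rather than the target image itself, would be passed to the network trained on the source domain.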

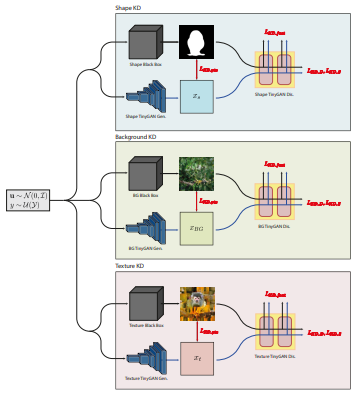

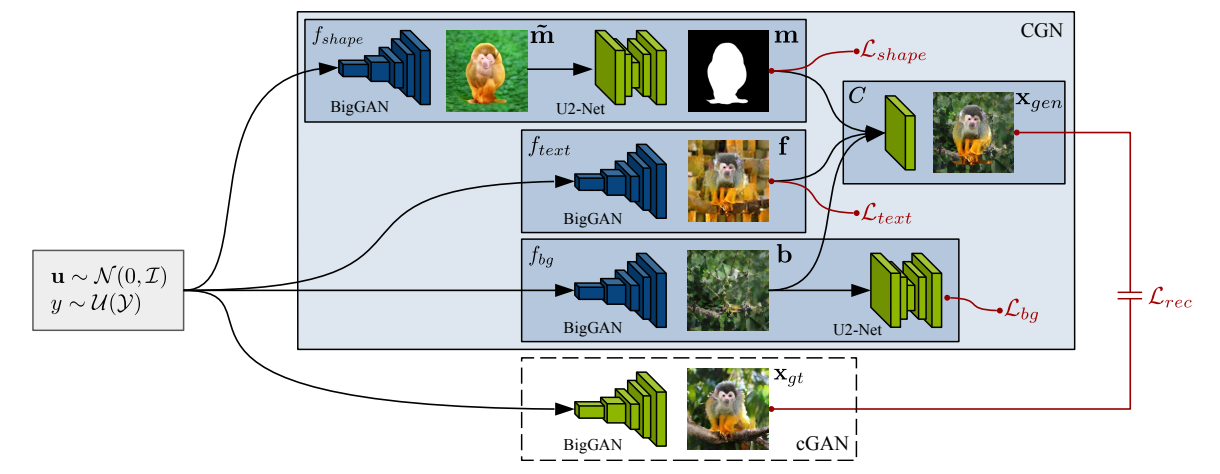

SKDCGN: Source-free Knowledge Distillation of Counterfactual Generative Networks using cGANs

We propose SKDCGN, a method that attempts knowledge transfer using Knowledge Distillation (KD). In the proposed architecture, each independent mechanism (shape, texture, background) is represented by a student 'TinyGAN' that learns from the pretrained teacher 'BigGAN'.

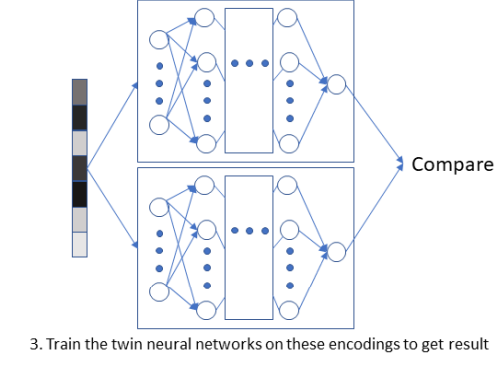

Twin Augmented Architectures for Robust Classification of COVID-19 Chest X-Ray Images

We introduce a state-of-the-art technique, termed Twin Augmentation, for modifying popular pre-trained deep learning models. Twin Augmentation boosts the performance of a pre-trained deep neural network without requiring re-training.

Education

University of Amsterdam, Research Masters in Artificial Intelligence (MSc AI)

September 2021 - June 2023

My MSc thesis (48 ECTS, Grade: Excellent) addressed test-time adaptation for domain generalization by proposing two methods: (i) formulating it as a variational inference problem and meta-learning variational neighbor labels in a probabilistic framework while exploring neighborhood information, and (ii) a surrogate update of the model without backpropagation.

Courses enrolled: Curriculum based and Research based.

Research Experience

University of Amsterdam, AIM Lab - Research Intern (Deep learning, Computer Vision)

June 2022 - June 2023

Indian Institute of Technology Delhi (IITD), Delhi, India - Research Assistant (Deep learning, Computer Vision)

January 2019 - July 2021

Indian Council of Medical Research (ICMR) NITM Bioinformatics Division, Belgaum, India - Research Trainee

October 2017 - December 2018

DbCom Inc., New Jersey, USA - Remote Intern

June 2015 - December 2016

Scholarships

Recipient of DigiCosme Full Master Scholarship, Université Paris-Saclay, France.

Activities & Leadership

Attended Oxford Machine Learning Summer School (OxML 2020 & OxML 2022), Deep Learning - University of Oxford

Attended PRAIRIE/MIAI PAISS 2021 Machine Learning Summer School - INRIA, Naver Labs

Attended Regularization Methods for Machine Learning 2021 (RegML 2021) - University of Genoa

For recreation, I like to play the Indian flute.

Rotaract Club of GIT

- Charter Secretary

- President

